How to assess the risks of both small and large AI projects

There is no shortage of ideas for applications, especially with generative AI. But are the legal requirements and risks adequately taken into account? In this article, we explain how corresponding risk and compliance assessments can be carried out. We have also developed a free tool for smaller and larger AI projects, which we will discuss here. This is part 4 of our AI series.

We are hearing from a number of companies that legal departments, data protection departments and compliance officers are being overwhelmed with ideas for new projects and applications with generative AI that they are asked to assess on short notice. In some cases, it is obvious what needs to be done: If a provider is used and granted access to personal data, for example, a data processing agreement is required – as in all cases where data processors are used. But what other points, especially those specific to AI, should be checked for compliance purposes in the case of an AI application? Will the EU's AI Act even play a role? And can this be assessed uniformly for all applications?

Indicators for high-risk AI projects

Our experience in recent months shows that many of the AI projects being implemented in companies do not yet entail really high AI risks. Certain homework has to be done, as with any project, but the applications are usually not particularly dangerous. For reasons of practicability alone, a distinction must therefore be made when assessing AI projects as to how many resources should be invested in their evaluation. As is already the case in the area of data protection, an in-depth risk assessment is only necessary if an AI project is likely to entail high risks for the company. It is true that AI projects still raise many new questions with which compliance and legal departments in particular often have no experience. However, this does not necessarily mean that such projects are particularly risky. Our clients have therefore asked us for criteria that can be used for triage.

The following questions can help to identify such high-risk projects:

- Will we create or further train the AI model we use for our application (with the exception of retrieval augmented generation methods)?

- Will we let our application take decisions on other people that they will find important?

- Will the application interact with a large number of people concerning sensitive topics?

- Would we consider legal steps if a 3rd party used such an application against us or with our data?

- Does the application have the potential to cause negative headlines in the media ("shitstorm")?

- Does the application qualify as a prohibited or high-risk system as per the EU AI Act?

- Will we be using our AI application not only for our purposes, but also offering it to third parties?

- Does the application require a large investment, or is it of strategic importance?

If the answer to one of these questions is "yes", an in-depth review of the risks should be carried out or at least considered. In all other cases, at least a summary risk assessment of the project should be undertaken. We describe both approaches below.
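The triage rule described above can be sketched in a few lines of Python. This is an illustrative sketch only and not part of GAIRA: the short question keys and the function name `needs_in_depth_review` are hypothetical labels introduced here for the eight indicators listed above.

```python
# Hypothetical short keys for the eight triage questions listed above.
HIGH_RISK_INDICATORS = (
    "creates_or_further_trains_model",
    "takes_important_decisions_about_people",
    "interacts_with_many_people_on_sensitive_topics",
    "would_consider_legal_steps_if_used_against_us",
    "potential_for_negative_headlines",
    "prohibited_or_high_risk_under_eu_ai_act",
    "offered_to_third_parties",
    "large_investment_or_strategic_importance",
)


def needs_in_depth_review(answers: dict[str, bool]) -> bool:
    """Return True if at least one indicator was answered with "yes".

    Unanswered questions raise an error so that triage is never
    silently based on an incomplete questionnaire.
    """
    missing = [key for key in HIGH_RISK_INDICATORS if key not in answers]
    if missing:
        raise ValueError(f"Unanswered triage questions: {missing}")
    return any(answers[key] for key in HIGH_RISK_INDICATORS)


# Example: an internal tool built on a third-party model ...
internal_tool = {key: False for key in HIGH_RISK_INDICATORS}
# ... and the same tool once it is also offered to third parties.
offered_tool = {**internal_tool, "offered_to_third_parties": True}

print(needs_in_depth_review(internal_tool))
print(needs_in_depth_review(offered_tool))
```

A single "yes" is enough to trigger (or at least prompt consideration of) the in-depth review, which is why the sketch uses `any` rather than a score or weighting.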

Risk assessments of "normal" (i.e. non-high-risk) AI projects

For projects where high risks for the company are unlikely – we would refer to them as "normal" projects – we recommend that, in addition to the usual review for data protection compliance, they are subjected to a review with regard to the handling of confidential content or content requiring special protection (e.g. copyrighted works) plus a review of the typical risks associated with the use of generative AI. As most projects work with third-party AI models, the various challenges relating to the creation and development of AI models are not relevant here, which simplifies matters.

We have formulated 25 requirements that companies can use to check the risk situation with regard to the use of generative AI with such "normal" projects (depending on the application, there may be even fewer):

- Our use of 3rd party confidential or protected data is in line with our contractual obligations

- Our provider contracts are in line with data protection law and our other legal obligations

- Our input/output will not be monitored by our provider(s) or we are fine with it

- Our input/output will not be used by our provider(s) for their own purposes or we are fine with it

- We have ensured that our AI solution will not leak confidential data or personal data to others

- Our application neither systematically processes sensitive personal data nor does any profiling

- We have a sufficient legal basis for processing personal data (where legally required)

- We are using personal data only for one of its original purposes (or one that had to be expected)

- We limit the personal data collected/used to the minimum necessary for the purpose

- We keep personal data in connection with the solution only for as long as needed

- We are able to comply with data subject requests (e.g., access, correction, objection, deletion)

- We have adequate data security and business continuity measures in place

- We inform people about our use of AI where this is relevant for their interaction with us

- The public and those affected by our use of AI will generally not find it unfair or objectionable

- We have measures to avoid or deal with erroneous or other problematic output (e.g., bias)

- Our application will be tested extensively prior to its use, including against adversarial use

- Our use of AI does not cause unintended repercussions for others (e.g., damage, discrimination)

- Our use of AI cannot be considered as being based on exploiting vulnerabilities of individuals

- We have human oversight where our AI solution could take or influence key decisions

- We will be using a widely recognized, quality AI model with a behavior we understand

- Our use of AI will neither mislead nor deceive anyone

- We have measures in place to detect any undesired behavior of our AI, to log it and to react to it

- Our use of AI respects the dignity and individual autonomy of those affected by it

- The users of our AI solution will be instructed, trained and monitored in its proper use

- We do not foresee any other uncontrolled issue in connection with our use of AI

If a company can confirm each of these points for a normal project, the most important and most common risks with regard to the use of generative AI appear to be under control according to current knowledge. Special legal and industry-specific requirements and issues are of course reserved.

If a requirement cannot be confirmed, this does not mean that the planned application is not permitted. However, the implementation of adequate technical or organizational measures should be considered to avoid or mitigate the respective risk – and the internal owner of the application must assume the residual risk.
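How such a checklist might be tracked can be sketched as follows. The sketch is hypothetical and does not reproduce the GAIRA worksheet: the names `Answer`, `Requirement` and `open_items` are invented for illustration, and it assumes that an unconfirmed requirement counts as handled once the owner has explicitly accepted the residual risk, with or without a documented measure.

```python
from dataclasses import dataclass
from enum import Enum


class Answer(Enum):
    YES = "yes"  # requirement is confirmed
    NO = "no"    # requirement cannot be confirmed
    NA = "n/a"   # requirement does not apply to this project


@dataclass
class Requirement:
    text: str
    answer: Answer
    measure: str = ""                     # mitigating measure, if any
    residual_risk_accepted: bool = False  # explicit decision by the owner


def open_items(requirements: list[Requirement]) -> list[Requirement]:
    """Return unconfirmed requirements that still lack an owner decision."""
    return [
        r for r in requirements
        if r.answer is Answer.NO and not r.residual_risk_accepted
    ]


checklist = [
    Requirement("We have a sufficient legal basis for processing personal data",
                Answer.YES),
    Requirement("Our input/output will not be monitored by our provider(s)",
                Answer.NO,
                measure="Restrict input to non-confidential content",
                residual_risk_accepted=True),
    Requirement("We have human oversight where our AI solution could take "
                "or influence key decisions",
                Answer.NO),
]

for item in open_items(checklist):
    print("Owner decision needed:", item.text)
```

In this example only the human-oversight requirement remains open, because it was answered "no" and the owner has not yet decided how to deal with it.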

Of course, depending on the project, applicable law and the company's own requirements (see our previous article on the 11 principles in this series) may give rise to further requirements and risks that need to be taken into account. In view of the large number of possible topics, the relevant rules are unfortunately not easy to identify – and with the additional AI regulation such as the AI Act (which we will cover separately) it will become even more difficult to ensure compliance especially for those offering AI-based products.

Also to be checked: Data protection impact assessment

As part of data protection compliance, it must also be checked whether the existing privacy notices and the records of processing activities (ROPA) need to be amended and whether a data protection impact assessment (DPIA) must be carried out. Where service providers are relied upon, their role (controller, processor) has to be determined and contracts assessed (see, for example, part 2 of our blog series on what we found out with regard to popular AI tools).

Whether a DPIA is necessary can be checked using the checklist here. This checklist is based on the traditional requirements of the Swiss Data Protection Act and GDPR as well as the recommendations of the Article 29 Working Party (i.e. the predecessor of the European Data Protection Board), as there currently is no better established rule-set (although we believe it should be overhauled, as it creates too many false positive hits). In our experience, a DPIA will only be necessary where personal data is systematically collected for the purpose of having it processed by an AI for purposes related to particular data subjects (i.e. not for statistical or other non-personal purposes), where large amounts of sensitive personal data are being processed, where an AI solution could have significant consequences for the persons whose personal data is processed according to the purpose of the application, where people rely on the output generated by the system (e.g., chatbots on sensitive topics) or where there are considerable risks as per the criteria we described above.

For the implementation of a DPIA itself, we recommend the free template we developed for the Swiss Association of Corporate Data Protection (VUD), which is available here (another template is integrated in the GAIRA Comprehensive worksheet, see below).

If you are looking for assistance in applying the Swiss Data Protection Act to AI, the VUD provides guidance here (only in German).

Copyright-relevant content?

Rules need to be observed and risks considered not just for personal data. Anyone implementing an AI project, especially when using external providers and their solutions, should also check whether (other) confidential or other legally protected content is being used, in particular as input for an AI.

To that end, it should be checked whether the company has contractually undertaken not to use the content at issue in an AI application (and, thus, not to eventually disclose it to third-party AI service providers) or not to use it in a particular manner. Such contractual obligations concerning AI are currently rather rare, but we expect to see them more frequently in license agreements, for example, and less frequently in confidentiality clauses and non-disclosure agreements (NDAs). The reason for this is that companies in the business of making content available will want to protect themselves from such content being used to train third-party AI models. Conventional confidentiality agreements will usually not prevent the use of information for AI purposes or its disclosure to an AI service provider. However, if service providers are used, it must be checked whether their contracts contain the necessary confidentiality obligations and whether the input and output of their services can also be used for their own purposes. The latter may often already violate most current license agreements if such AI solutions are used with copyrighted works, as most license agreements permit companies to use licensed content only for their own internal purposes and, thus, not for the training of third-party AI models.

It is also necessary to check whether data is used that is subject to official or professional secrecy or a comparable duty of confidentiality, for which special precautions are necessary, especially if such data must be disclosed to a service provider. In the latter case, special contractual and possibly also technical precautions will be necessary.

In a separate post in this series we will discuss in more detail the copyright aspects of AI, including the question of the extent to which the use of AI-generated content bears the risk of violating third-party copyrights. Usually, machine-generated content is not copyrighted, but where such content happens to include original works of humans, the situation is different. And, of course, AI content generators can be used for copyright infringement or violations of other laws (e.g., trademark law, unfair competition) much like any content creation tool. The more difficult question to be assessed is who becomes liable for such infringements in the value chain.

How to proceed with the assessment of "normal" AI projects?

For "normal" AI projects or AI applications, we recommend the following three steps:

- The owner of the project or application should be asked to document it, i.e. explain what it is all about, how AI is used, provide provider contracts, describe the technical and organizational measures taken, etc.

- The above indicators for high-risk AI projects should be used to determine whether an in-depth risk assessment is required or to document why this is not the case. This includes an assessment of whether the planned activity will be specifically covered by the upcoming EU AI Act, either as a prohibited practice or as a high-risk AI system.

- For all the requirements described above, it should be documented whether they have been complied with or whether the necessary measures have been taken or are planned to eliminate the risks mentioned or reduce them to an acceptable level. Here, the owner will of course depend on the help of internal specialist departments (2nd line of defense) such as the legal department, the data protection department and the CISO, e.g. when reviewing a data processing agreement, confidentiality clause or information security measures.

Once these three steps have been successfully completed, the owner of the project or application can decide on its implementation, save for any other preconditions that need to be fulfilled. We will discuss the governance aspects of this in a separate post in our series.

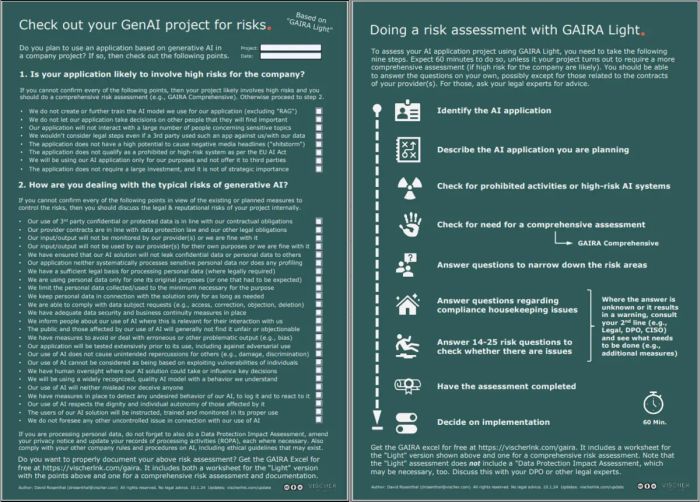

We have developed a template for carrying out and documenting the above process. It is available for free here as an Excel worksheet under the name "GAIRA Light". In addition, we have also created a one-page questionnaire (with the questions for identifying high-risk applications and the 25 requirements for "normal" projects shown above) as well as a quick guide for GAIRA Light (available here).

Here are some practical tips for filling out GAIRA Light (the tool itself also contains instructions and explanatory notes):

- Projects with likely high risks: The decision as to whether a project likely has high risks should be made by the owner, as he or she is ultimately responsible for it. This can be documented in GAIRA Light. However, the owner is also required to provide a justification.

- Preliminary questions ("preparatory questions"): They are used to determine which of the subsequent questions are really necessary for the project. Depending on the selection, certain questions will be shown or hidden further down the form (if no personal data is processed, for example, the questions about data protection do not have to be answered).

- Carrying out the risk assessment: This is done by answering each of the respective questions with "Yes" or "No" (or "N/A" if it does not seem applicable). For example, one question asks whether people are informed about the use of AI where this appears to be important. If this does not appear to be important anywhere or if people are actually informed, this question can be answered with "Yes". For other questions, a preliminary check by your experts will be necessary. This is also the case when questions are asked that require knowledge of the relevant contracts (e.g., whether the data processing agreement is in line with data protection law). If the answer indicates a risk, a warning is displayed and the matter should be referred to the internal experts (the "2nd line" mentioned above). They will have to assess the response and the reason given for it and make a recommendation on how to deal with the situation. This is also entered into the form (e.g. "OK" or "Risk Decision"). Based on this, the owner must then decide what to do, i.e. whether further measures are necessary (if so, they can be documented in the form as well) or whether the remaining risk is accepted.

- Assessment period: The period for which the assessment is being carried out must be specified, subject to unscheduled adjustments. This only has an effect on the calculation of the date of the recommended resubmission shown at the end.

- Overall risk assessment ("Overall risk level"): As a final step, the owner is asked to provide an overall risk assessment. This is ultimately a subjective assessment based on the individual assessed risks listed in the form above. It is intended to motivate reflection on the project as a whole. This risk assessment can also be entered in the records of AI applications (ROAIA; the GAIRA Excel contains a template for this).

- Further guidance: For some questions or criteria, we have included explanations in a small note that will appear when you move the mouse pointer over the small red triangle in the upper right corner of the relevant field.
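To illustrate how the assessment period feeds into a recommended resubmission date, here is a minimal sketch. It assumes that the resubmission date is simply the assessment date plus the assessment period in months; the formula actually used in the GAIRA worksheet is not reproduced here, and the function name `resubmission_date` is hypothetical.

```python
import calendar
from datetime import date


def resubmission_date(assessed_on: date, period_months: int) -> date:
    """Assessment date plus the assessment period.

    If the target month is shorter than the starting day (e.g. 31 January
    plus one month), the result is clamped to the last day of that month.
    """
    if period_months <= 0:
        raise ValueError("The assessment period must be at least one month")
    month_index = assessed_on.month - 1 + period_months
    year = assessed_on.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(assessed_on.day, last_day))


print(resubmission_date(date(2024, 3, 15), 12))  # 2025-03-15
```

Unscheduled adjustments, for example after a material change to the application, would still require an earlier review regardless of the calculated date.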

GAIRA Light is currently only available in English. The one-page forms mentioned above are also available in German here.

If you need support, we are happy to help you.

Handling of projects with likely high risks

The assessment of such AI projects and applications is understandably more complex and time-consuming, and it will not be possible without some form of expert support. We nevertheless recommend a systematic approach for quality and efficiency purposes.

We recommend the following six steps:

- The owner of the project or application should be asked to document it, i.e. explain what it is all about, how AI is used, provide provider contracts, describe the technical and organizational measures taken, etc.

- Examine whether the planned activity will be specifically covered by the EU AI Act, either as a prohibited practice or as a high-risk AI system, as this may trigger certain additional requirements.

- Explain why the planned activity in this form is proportionate and necessary, at least with regard to the use of personal data. This is only necessary for the purposes of a data protection impact assessment (if carried out at the same time as and as part of the general AI risk assessment).

- Compile and list the technical and organizational measures ("TOM") that have been or are to be taken to avoid or mitigate risks or to comply with the relevant legal requirements (e.g., contracts with service providers, information security measures, instructions to users, specific configurations of the AI system used, tests).

- Assess for all relevant areas (e.g., model, input, output) and topics (e.g. data protection, confidentiality, intellectual property) whether and which residual risks still exist despite the TOM that have been implemented and planned, and why. A distinction must be made between risks for the company (financial risks, reputational risks, regulatory or criminal law risks) and risks for the persons affected (any unintended negative consequences). The assessment of the risks for the data subjects can also serve as a basis for the data protection impact assessment that is usually required for such projects anyway. For each risk, the owner of the application should consider whether the risk can be further reduced or even eliminated with additional measures. If so, the corresponding measure must be added to the list of TOM (and of course implemented accordingly). It should already be considered in the risk assessment.

- Once the risk assessment has been carried out, a final assessment must be made and, in particular, it must be checked that none of the identified risks are unacceptably high for the company or the data subjects. In the event of high risks for data subjects due to the processing of their personal data, the project must also be submitted to the data protection supervisory authority (although this almost never occurs).

We have also developed a suitable template for carrying out and documenting the above process. It is available to download here free of charge as an Excel worksheet under the name "GAIRA Comprehensive" and contains an example (based on an imaginary AI project). The template also contains a worksheet for checking the legal compliance of AI projects. This, however, is optional and does not need to be completed in our view, as most of these questions will be addressed anyway during the risk assessment (when discussing the TOM) or as part of the usual compliance procedures.

In practice, experience has shown that the following points must be observed when using GAIRA Comprehensive (although it also contains instructions and explanatory notes):

- How to complete it: It is the owner of the AI application in question who has to complete GAIRA Comprehensive. This particularly applies to the risk assessment. However, it is clear that this person will not be able to reasonably assess some of the risks alone due to a lack of expert know-how on the relevant topics (data protection, copyright, information security, etc.) and thus needs the support of specialists such as the legal department or the data protection officer. There are two approaches here: The owner can either complete the form on their own to the best of their knowledge, then discuss it in a workshop with the experts (who should receive it in advance for review) and finalize it thereafter. Or the form can be completed jointly from the outset, whereby the decision on the risk assessment must ultimately always remain with the owner, never with the experts. This second approach takes more time for all those involved, but is easier for the owner.

- Too many/too few risks: The GAIRA Comprehensive template lists a whole series of AI risks as suggestions, sorted according to risk areas and topics. For certain projects, some of these risks will be irrelevant, for other projects the list provided may appear incomplete. In the former case, the risks in question can simply be skipped by setting the risk or probability of occurrence to "N/A". In the latter case, you can add your own risks in each area. If it is a data protection risk that is also to be taken into account for the purposes of the DPIA, the abbreviation "DP" (Data Protection) must be entered in the "Risk area" column. At the end of the respective row the form will then also indicate whether the overall data protection risk for the data subjects is low, medium or high.

- Measures: It is normal that not all TOM will be defined at the beginning and that the owner will struggle to think of every TOM. Usually, many TOM come into play ad hoc and at random. Having a list provides a more systematic approach and thus helps to ensure that fewer TOM are forgotten (the template also contains some examples of TOM for inspiration). Some measures will only be added to the list when doing the risk assessment, for example because the owner of the application concludes that the existing TOM do not sufficiently address the risk at issue and, therefore, further TOM are required. This is not a flaw in the process, but rather the purpose of a systematic risk assessment as GAIRA Comprehensive provides for: By systematically working through all potential risks, it is particularly easy to determine where measures are still lacking and to plan them. At the end, GAIRA Comprehensive will contain a list of all necessary (or rejected) measures. This helps to maintain an overview.

- Data protection impact assessment: Many AI projects also require a DPIA. We have therefore designed GAIRA Comprehensive in such a way that the DPIA can be carried out as part of the general risk assessment. This saves time and effort. However, it is still possible to carry out the DPIA separately (e.g. using the VUD template). In these cases, it can be indicated in the first part of the GAIRA form that the subsequent assessment does not include a DPIA or that this will be documented separately.

- Operational risks: AI applications are IT applications and therefore also use corresponding IT systems. These can entail their own, non-AI-related risks, for example with regard to information security or business continuity. When GAIRA Comprehensive is completed, it is advisable to define from the outset whether these and other operational, non-AI-related risks should also be assessed with GAIRA. This will often not be the case, especially if existing IT infrastructure is being used for the AI application; its operational risks may already be well known. In GAIRA Comprehensive, this can be declared at the start of the risk assessment. It is important to be clear about this point so that all participants know exactly what the risk assessment covers.

- Assessment period: Every risk assessment must be carried out for a defined period of time, never for eternity. In GAIRA Comprehensive, this period can be specified at the start of the risk assessment. It will typically be one to three years.

- Risk matrix and levels: The scale or terms for describing the probability and risk exposure used in GAIRA Comprehensive can be adjusted to match company-specific terms. The lists and colored fields at the end of the risk assessment in "Step 4" are used for this purpose. The texts in the gray fields can be adapted. However, this should be done before the risk assessment is carried out; a subsequent adjustment does not update any portions of the risk assessment that have already been carried out. The colors in the fields are applied automatically. The value in the gray field to the right indicates the value from which there is an increased risk ("yellow") or from which there is a "medium" or "high" risk from a data protection perspective.

- Assessment status: The status of the assessment can be documented at the very beginning of GAIRA Comprehensive. GAIRA Comprehensive is usually not completed in one go, but typically over a period of several days, because various pieces of information have to be collected and various departments will be involved. Responsibility should lie with the owner of the project, even if the practical implementation has been delegated to a project manager.
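For readers unfamiliar with risk matrices, the following sketch shows how a probability level and an impact level might be combined into a color using configurable thresholds. It is an assumption-laden illustration and does not reproduce the scales, formula or thresholds of GAIRA Comprehensive: the three-level scales, the multiplication of levels and the values `YELLOW_FROM` and `RED_FROM` are all hypothetical.

```python
from typing import Optional

# Hypothetical company-specific scales; the terms can be renamed freely.
PROBABILITY = {"low": 1, "medium": 2, "high": 3}
IMPACT = {"minor": 1, "significant": 2, "severe": 3}

# Hypothetical thresholds: scores at or above these values change the color.
YELLOW_FROM = 3
RED_FROM = 6


def risk_color(probability: Optional[str], impact: Optional[str]) -> str:
    """Map a probability/impact pair to a color, or "n/a" if a risk is skipped."""
    if probability is None or impact is None:
        return "n/a"  # risk marked as not applicable for this project
    score = PROBABILITY[probability] * IMPACT[impact]
    if score >= RED_FROM:
        return "red"
    if score >= YELLOW_FROM:
        return "yellow"
    return "green"


print(risk_color("medium", "significant"))  # yellow
```

The sketch also shows why scales and thresholds should be fixed before the assessment starts: changing `YELLOW_FROM` or the level names afterwards would change how previously recorded ratings are read.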

We are happy to support you in carrying out such risk assessments and especially the workshops mentioned above. This can be particularly helpful when you do them for the first time.

We hope that these explanations will help in practice to efficiently and effectively assess small and large AI projects with regard to their legal and other risks. GAIRA is open source and benefits from feedback from the tool's users. We are happy to receive such feedback, as well as suggestions for improvements – and we thank all those who have already contributed to making the tool better for the benefit of the entire community. However, it is currently only available in English.

We also want to point out other initiatives that can help organizations to better manage the risks of using AI, such as the widely cited AI Risk Management Framework of NIST, which is available here for free. It has a different, broader scope than GAIRA and is, in essence, a blueprint for establishing a risk management system concerning AI (whereas GAIRA is in essence a checklist of risks and points to consider in a specific project and a tool to document the outcome). They do not compete, but work hand in hand. The AI RMF of NIST is, however, very comprehensive and complex and will, in our experience, overwhelm many organizations. We have seen this also with GAIRA, which is simpler and more limited, but in its initial version (today GAIRA Comprehensive) was too much for many projects, which is why we created GAIRA Light. And some would still consider even GAIRA Light quite detailed.

In the next article in our blog post series, we will discuss recommendations for effective governance of the use of AI in companies.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.