This application concerns the field of man-machine interaction for users interacting with digital networks. Here are the practical takeaways from the decision T 0929/15 (Identifying a user issuing a voice request/ACCENTURE) of 17.11.2020 of the Technical Board of Appeal 3.5.05:

Key takeaways

- Reliably and conveniently mapping a voice request to the user who issued the request is a technical problem.

The invention

This European patent application concerns a computer network and a server for natural language-based control of a digital network. In particular, the invention is directed at digital home networks comprising a plurality of devices such as a personal computer, a notebook, a CD player, a DVD player, a Blu-ray Disc playback device, a sound system, a television, a telephone, a mobile phone, an MP3 player, a washing machine, a dryer, a dishwasher, lamps and/or a microwave.

According to the application, such increasingly complex digital networks lack a unified and efficient way to be managed and controlled by users. Furthermore, digital networks require a user to learn and to interact with a plurality of different, often heterogeneous, user interfaces in order to satisfactorily interact with the different devices associated in a digital network.

The invention therefore sets out to provide for improved man-machine interaction for users interacting with digital networks.

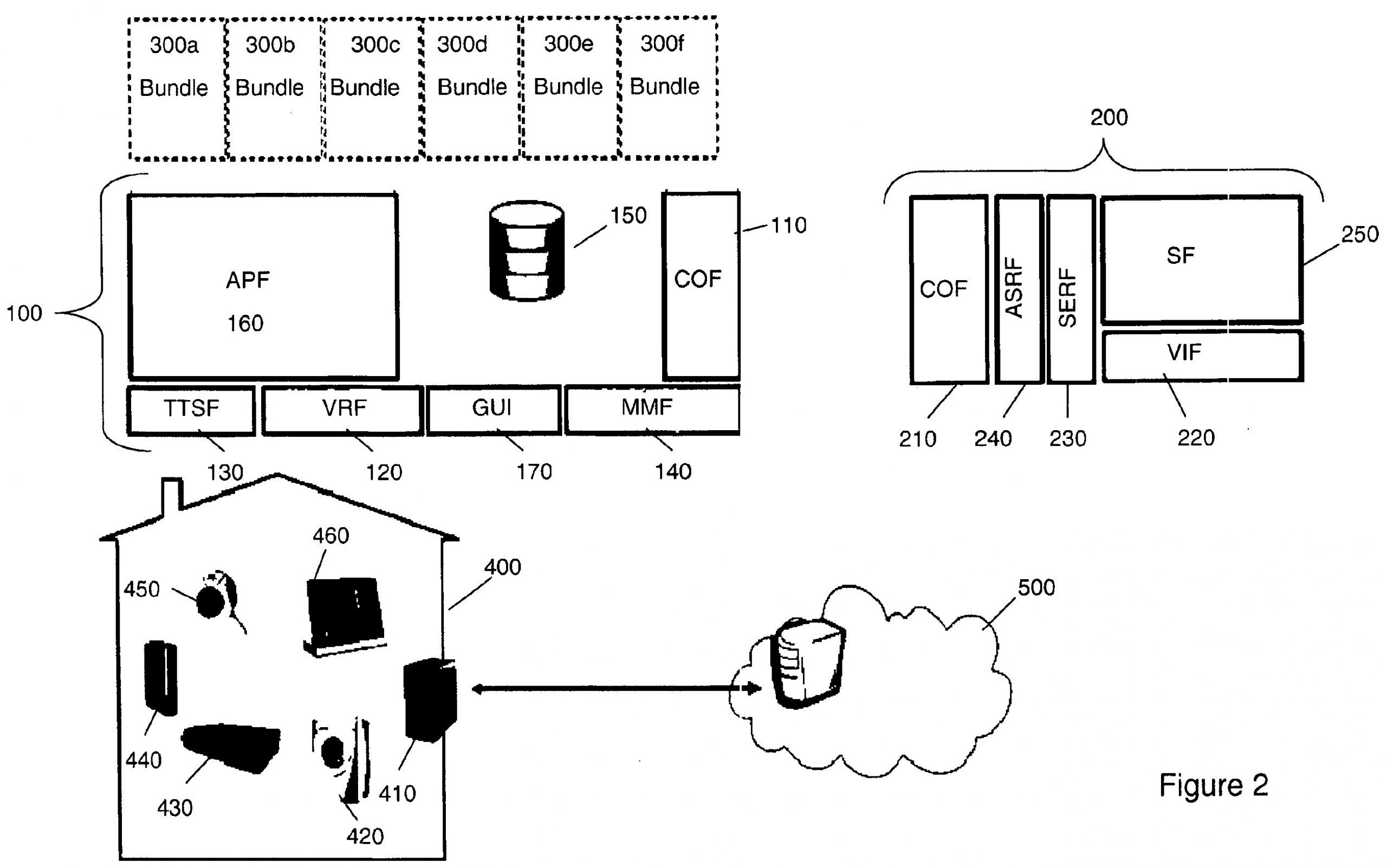

Fig. 2 of EP 2 498 250

Here is how the invention was defined in claim 1:

Claim 1 (main request)

Computer network for natural language-based control of a digital home network (400), the network comprising:

a digital home network (400) comprising a plurality of devices (410, 420, 430, 440, 450, 460) and operable to provide sharing of Internet (500) access between the plurality of devices (410, 420, 430, 440, 450, 460);

a client (100) installed in the digital home network (400) comprising:

a unified natural language interface operable to receive a natural language-based voice user request (12) for controlling the digital home network (400) using natural language; and

a module management component (140) operable to provide an interface to a plurality of software agents (300a, 300b, 300c, 300d, 300e, 300f) for publishing one or more actions offered by the software agents (300a, 300b, 300c, 300d, 300e, 300f) to the client (100); and

a server (200) connected to the client (100) over the digital home network (400) operable to process the natural language-based voice user request (12) received from the client (100); and

the software agents (300a, 300b, 300c, 300d, 300e, 300f), wherein each of the software agents (300a, 300b, 300c, 300d, 300e, 300f) is operable to execute at least one action on at least one of the plurality of devices (410, 420, 430, 440, 450, 460) which is controlled by said corresponding one of the software agents (300a, 300b, 300c, 300d, 300e, 300f) based on a command received by the module management component (140) of the client device (100);

wherein the server (200) is operable to process the natural language-based user request (12) received from the client (100) resulting in a processed user request (12) comprising a list of tags and an identification of a user (10) that provided the user request (12) identified by a voice identification component (220) of the server, wherein the user is identified based on the natural-language based user request (12) and wherein the voice identification component (220) is operable to identify users issuing user requests by:

– processing incoming voice samples of each user request;

– extracting features from the incoming voice samples; and

– matching the extracted features against voice prints of users stored in a database;

wherein, based on the processed user request (12) received from the server (200), the client (100) is operable to select a target device of the plurality of devices (410, 420, 430, 440, 450, 460) and a corresponding action to be performed;

wherein the client (100) is operable to perform the action by forwarding a corresponding command to the module management component (140);

wherein the module management component (140) is operable to trigger a corresponding software agent to perform the action on the target device (410, 420, 430, 440, 450, 460) which is controlled by said corresponding one of the software agents (300a, 300b, 300c, 300d, 300e, 300f);

wherein the action serves the natural language-based voice user request (12);

wherein the client (100) comprises further, a graphical user interface, GUI, (170) operable to be exposed to the user (10) for specifying user-defined settings of actions to be executed by the plurality of software agents (300a, 300b, 300c, 300d, 300e, 300f) on at least one of the plurality of devices (410, 420, 430, 440, 450, 460); and

wherein the client is operable to expose a single point of contact through the module management component (140) to the software agents (300a, 300b, 300c, 300d, 300e, 300f) by providing services to the software agents (300a, 300b, 300c, 300d, 300e, 300f) in order to allow the software agents (300a, 300b, 300c, 300d, 300e, 300f) to access the GUI via the module management component (140).
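The claimed architecture, in which software agents publish their actions to the module management component, the client selects a target device and action from the processed request, and the module management component then triggers the responsible agent, can be sketched roughly as follows. This is an illustrative Python sketch under simplifying assumptions: every class and method name, the tag-matching rule used to select the device and action, and the lamp example are hypothetical and do not come from the application or the decision.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set, Tuple

# All names below are hypothetical illustrations, not taken from EP 2 498 250.


@dataclass
class ProcessedRequest:
    """What the server hands back to the client: a list of tags plus the identified user."""
    tags: List[str]
    user_id: str


class SoftwareAgent:
    """Controls one device and offers named actions on it."""

    def __init__(self, device: str, actions: Dict[str, Callable[[str], str]]) -> None:
        self.device = device
        self.actions = actions


class ModuleManagementComponent:
    """Single point of contact between the client and the software agents."""

    def __init__(self) -> None:
        self._registry: Dict[Tuple[str, str], SoftwareAgent] = {}

    def publish(self, agent: SoftwareAgent) -> None:
        # Each agent publishes the (device, action) pairs it can execute.
        for action in agent.actions:
            self._registry[(agent.device, action)] = agent

    def offered(self) -> Set[Tuple[str, str]]:
        return set(self._registry)

    def trigger(self, device: str, action: str, user_id: str) -> str:
        # Forward the command to the agent that controls the target device.
        agent = self._registry[(device, action)]
        return agent.actions[action](user_id)


class Client:
    def __init__(self, mmc: ModuleManagementComponent) -> None:
        self.mmc = mmc

    def handle(self, request: ProcessedRequest) -> str:
        # Assumed selection rule: take the first (in sorted order) published
        # (device, action) pair whose device and action both appear among the tags.
        for device, action in sorted(self.mmc.offered()):
            if device in request.tags and action in request.tags:
                return self.mmc.trigger(device, action, request.user_id)
        raise LookupError("no published action matches the request tags")


mmc = ModuleManagementComponent()
mmc.publish(SoftwareAgent("lamp", {
    "on": lambda user: f"lamp on for {user}",
    "off": lambda user: f"lamp off for {user}",
}))
client = Client(mmc)
print(client.handle(ProcessedRequest(tags=["lamp", "on"], user_id="alice")))  # lamp on for alice
```

Keeping the registry inside the module management component mirrors the claimed single point of contact: the client never addresses an agent directly, it only forwards a command and lets the module management component find the agent responsible for the target device.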

Is it patentable?

According to the board of appeal, claim 1 differed from the closest prior art in that the processing of a natural language-based voice request, besides producing a list of tags, also results in the identification of the user that provided the request. In particular, the user is identified by processing incoming voice samples of each request, extracting features therefrom and matching the extracted features against stored voice prints of users.

The board accepted that this feature produces a technical effect, reasoning as follows:

This distinguishing feature has the technical effect that each voice request is associated with the identity of the user who issued it, without the need for the user to explicitly input their identity.

The objective technical problem solved by this feature can thus be regarded as how to reliably and conveniently map a voice request to the user who issued the request.
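The three steps that claim 1 recites for the voice identification component (processing incoming voice samples, extracting features, and matching them against stored voice prints) can be illustrated with the minimal Python sketch below. It is a toy under stated assumptions: real speaker recognition uses much richer features (for example mel-frequency cepstral coefficients or learned speaker embeddings), whereas here the "features" are unit-normalised spectral magnitudes at a few fixed frequencies and the "voices" are synthetic sine tones. The sample rate, probe frequencies, similarity threshold and all function names are hypothetical, not taken from the application or the decision.

```python
import math
from typing import Dict, List, Optional, Sequence

# Assumed parameters, chosen only for this illustration.
SAMPLE_RATE = 8000  # samples per second
PROBE_FREQS = [100, 200, 300, 400, 600, 800, 1200, 1600]  # Hz
MATCH_THRESHOLD = 0.9  # minimum cosine similarity to accept a match


def extract_features(samples: Sequence[float]) -> List[float]:
    """Crude stand-in for real voice features: unit-normalised DFT magnitudes
    at a few fixed probe frequencies."""
    n = len(samples)
    mags = []
    for f in PROBE_FREQS:
        w = 2 * math.pi * f / SAMPLE_RATE
        re = sum(s * math.cos(w * i) for i, s in enumerate(samples))
        im = sum(s * math.sin(w * i) for i, s in enumerate(samples))
        mags.append(math.hypot(re, im) / n)
    norm = math.sqrt(sum(m * m for m in mags)) or 1.0
    return [m / norm for m in mags]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def identify_user(samples: Sequence[float], voice_prints: Dict[str, List[float]]) -> Optional[str]:
    """Return the enrolled user whose voice print best matches the request, or None."""
    features = extract_features(samples)
    best_user, best_score = None, MATCH_THRESHOLD
    for user, voice_print in voice_prints.items():
        score = cosine(features, voice_print)
        if score >= best_score:
            best_user, best_score = user, score
    return best_user


def tone(freqs: Sequence[float], seconds: float = 0.1) -> List[float]:
    """Synthetic 'voice' made of pure sine tones, for demonstration only."""
    return [sum(math.sin(2 * math.pi * f * i / SAMPLE_RATE) for f in freqs)
            for i in range(int(SAMPLE_RATE * seconds))]


# Enrolment: store one voice print per user in an in-memory "database".
voice_prints = {
    "alice": extract_features(tone([200, 400])),
    "bob": extract_features(tone([300, 600])),
}
print(identify_user(tone([200, 400]), voice_prints))   # alice
print(identify_user(tone([100, 1600]), voice_prints))  # None
```

Because `identify_user` works on the same samples that carry the request, the user is identified as part of processing the request rather than through a separate log-in step beforehand. A `None` result simply means that no enrolled voice print was similar enough to the incoming request.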

The solution suggested in claim 1 was to biometrically identify the user who issued the voice request, based on features of the voice sample. The board concluded that this solution was not rendered obvious by the available prior art:

D4 suggests in several paragraphs, notably [0089] and [0092], that a group of users can each have a respective companion, or make use of a central companion running multiple processes for each user. However, it is silent on the complications that may arise in these scenarios, in particular how the companion(s) is/are to map a received voice request to a particular user. The appellant convincingly argued that even though D4 suggests, notably in paragraph [0094], that user authentication be required in order to access a companion – an authentication which might also take the form of an audio signature or a biometric signature – D4 consistently teaches (e.g. paragraph [0035]) that in such cases user identification is a precondition for accepting any request from the user. This teaches away from identifying the user while the received request is being processed.

Therefore, the claimed solution would not be obvious to the skilled person based on D4 alone. Nor would the skilled person be guided by the remaining documents on file to arrive at the solution in claim 1, since these documents are either entirely silent on providing a voice user interface or do not suggest user identification based on voice.

Accordingly, the board remitted the case to the examining division with an order to grant a patent.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.