On February 2, 2024, the representatives of the EU Member States unanimously endorsed the final text of the Artificial Intelligence (AI) Regulation, referred to as the AI Act. This legislation establishes a strict legal framework governing the development, placing on the market and use of artificial intelligence within the EU, marking a significant step in the regulation of this fast-growing field.

What is the purpose?

This new regulation aims to establish harmonised rules to ensure that AI systems in the EU are safe and respect fundamental rights, while also fostering investment and innovation in the field of AI.

Who does it apply to?

The AI Act directly affects businesses operating within the EU, whether they are providers, deployers (users), importers, distributors or product manufacturers of AI systems. The legislation defines each of these roles and assigns each its own obligations, so every actor in the AI value chain must ensure that its practices meet the requirements of the AI Act.

The AI Act also applies extraterritorially to companies not established in the EU. Providers based outside the EU fall within its scope when they place AI systems on the EU market or put them into service in the EU, and both providers and deployers located outside the EU are covered where the output produced by their AI system is used in the EU. Consequently, companies operating outside the EU may be subject to the AI Act if their AI-related activities reach EU users.

What are the requirements?

The key requirements of the AI Act include the following:

- Compile an inventory of current AI systems. Organisations that do not yet maintain such a register should assess their current position to understand their potential exposure; even organisations that do not use AI today are likely to do so in the coming years. A first identification exercise can start from an existing software/application catalogue or, failing that, from surveys of the various departments, mainly IT and risk.

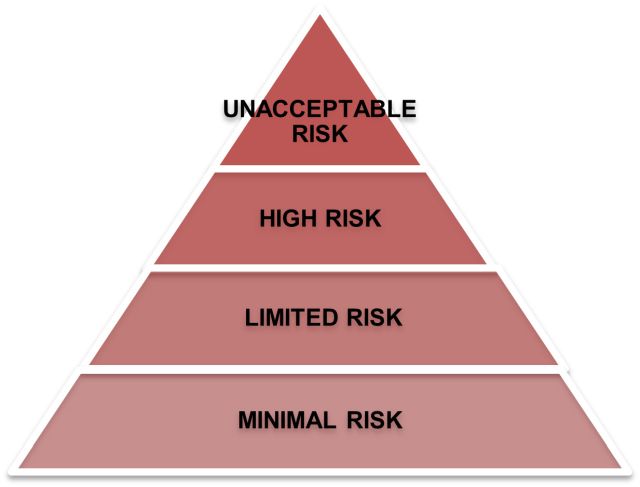

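- Classify AI systems according to their associated risks. AI systems deemed to pose a high risk must comply with strict requirements and undergo a conformity assessment before being placed on the market. The regulatory framework defines four levels of risk:

  - unacceptable risk: practices that are prohibited outright;
  - high risk: systems subject to strict requirements and a conformity assessment;
  - limited risk: systems subject to specific transparency obligations;
  - minimal risk: systems with no specific obligations under the Act.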

- Establish governance and monitoring. Companies must put in place appropriate governance and monitoring measures to ensure that their AI systems meet the ethical and legal standards set by the AI Act.
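For teams that want to keep the inventory and risk classification in a structured internal register, the following Python sketch shows one possible shape for it. It is a hypothetical, simplified illustration only: the record fields (`prohibited_practice`, `high_risk_use_case`, `interacts_with_people`) and the `triage` function are assumptions made for this example, not terms or tests defined by the AI Act, and an actual classification into the four risk levels (unacceptable, high, limited and minimal) requires a legal analysis of the Act's criteria.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    """The AI Act's four risk levels (labels chosen for this sketch)."""
    UNACCEPTABLE = "unacceptable"  # prohibited practices
    HIGH = "high"                  # strict requirements + conformity assessment
    LIMITED = "limited"            # transparency obligations
    MINIMAL = "minimal"            # no specific obligations


@dataclass
class AISystemRecord:
    """One entry in a hypothetical internal AI inventory.

    The boolean flags are illustrative answers recorded by a reviewer
    (e.g. from the IT or risk department); they are NOT legal tests.
    """
    name: str
    owner_department: str
    prohibited_practice: bool = False
    high_risk_use_case: bool = False
    interacts_with_people: bool = False


def triage(record: AISystemRecord) -> RiskLevel:
    """Assign a provisional risk level, checking the most severe level first."""
    if record.prohibited_practice:
        return RiskLevel.UNACCEPTABLE
    if record.high_risk_use_case:
        return RiskLevel.HIGH
    if record.interacts_with_people:
        return RiskLevel.LIMITED
    return RiskLevel.MINIMAL


def needs_conformity_assessment(inventory: list[AISystemRecord]) -> list[str]:
    """Return the names of systems provisionally classified as high risk."""
    return [r.name for r in inventory if triage(r) is RiskLevel.HIGH]


if __name__ == "__main__":
    inventory = [
        AISystemRecord("CV screening tool", "HR", high_risk_use_case=True),
        AISystemRecord("Customer chatbot", "Sales", interacts_with_people=True),
        AISystemRecord("Spam filter", "IT"),
    ]
    for record in inventory:
        print(f"{record.name} -> {triage(record).name}")
    # CV screening tool -> HIGH
    # Customer chatbot -> LIMITED
    # Spam filter -> MINIMAL
    print(needs_conformity_assessment(inventory))  # ['CV screening tool']
```

Checking the most severe level first means a system flagged under several headings is always reported at its strictest level, which is the conservative choice when the register is used to prioritise compliance work.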

Companies should start preparing now to bring their AI practices into line with the new rules. To begin working towards full compliance with the AI Act, they should:

- assess the risks associated with your AI systems;

- raise awareness;

- design ethical systems;

- assign responsibilities;

- stay updated; and

- establish formal governance.

By taking proactive measures now, organisations can ensure that their AI systems are compliant when the AI Act's obligations take effect, allowing them to continue using those systems and to avoid potentially significant sanctions.

What are the sanctions?

The penalties for non-compliance with the AI Act are significant and are tiered according to the severity of the infringement. The highest tier, for engaging in prohibited AI practices, reaches €35 million or 7% of the company's total worldwide annual turnover, whichever is higher, with lower ceilings for other infringements. It is therefore crucial for companies to fully understand the provisions of the AI Act and comply with its requirements to avoid such sanctions.

In summary, the AI Act aims to establish a robust regulatory framework for AI, guaranteeing both safety and respect for fundamental rights while promoting innovation and competitiveness for companies operating in the EU. Companies need to take proactive steps to comply with this new legislation and ensure ongoing compliance of their AI practices.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.